Why Machine Decisions Are Never as Neutral as We Think

The Invisible Problem

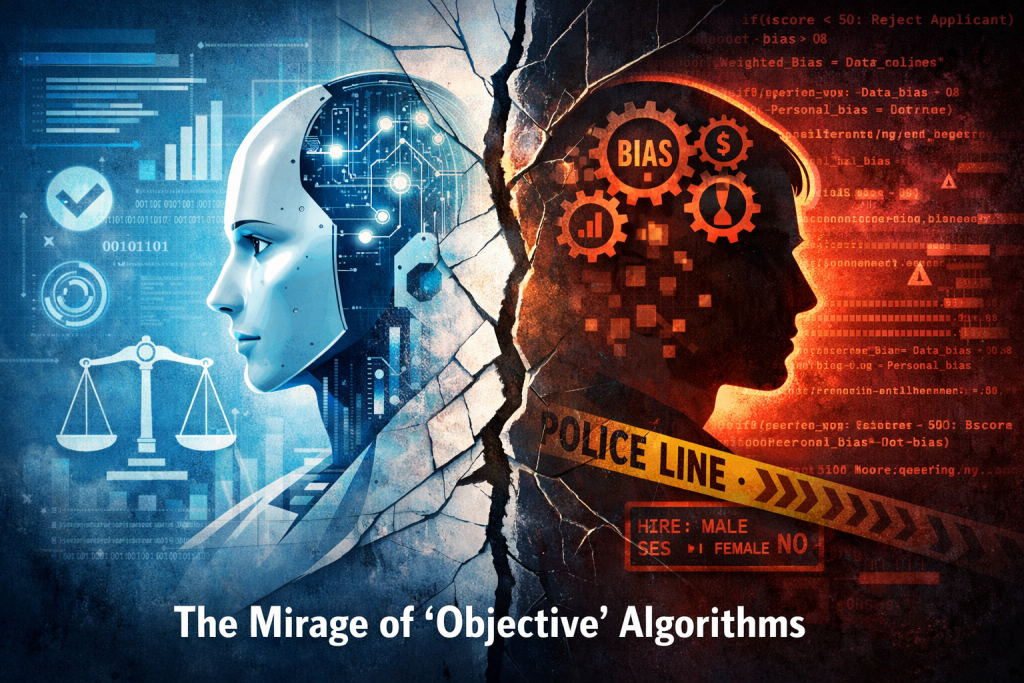

Algorithms are often presented as objective, neutral decision-makers, but that belief is one of the most dangerous myths of the AI era.

For years, artificial intelligence has been marketed as the antidote to human weakness.

The narrative is seductive.

Machines don’t get tired.

They don’t have emotions.

They don’t hold grudges.

So the assumption feels natural: if decisions are made by algorithms, they must be neutral, fair, and unbiased.

This belief has quietly shaped how businesses, governments, and institutions trust AI systems.

The truth, however, is uncomfortable:

There is no such thing as a truly objective algorithm.

The AI Facade

AI systems do not form opinions the way humans do — but they absolutely inherit them.

Every model is trained on data.

That data is a digital footprint of human history, human systems, and human choices.

What happens next is predictable:

- Biased hiring practices become biased hiring models

- Unequal policing becomes predictive policing

- Historical discrimination becomes “statistical accuracy”

The algorithm does not remove bias.

It mathematically stabilises it.
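The hiring example can be made concrete with a minimal Python sketch. It uses an invented toy dataset, not any real hiring system. The hypothetical `train` function counts how often each (group, qualified) combination was hired in the past and uses that historical rate as the model's score. Nothing in the code mentions prejudice. Even so, equally qualified candidates receive different scores because the past decisions did.

```python
from collections import defaultdict

# Hypothetical, invented historical records: (group, qualified, hired).
# In this toy data, qualified candidates from group "B" were hired less often.
HISTORY = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
    ("A", False, False), ("A", False, True),
    ("B", False, False), ("B", False, False),
]


def train(records):
    """Fit a frequency 'model': the historical hire rate per (group, qualified)."""
    counts = defaultdict(lambda: [0, 0])  # key -> [times hired, times seen]
    for group, qualified, hired in records:
        counts[(group, qualified)][0] += int(hired)
        counts[(group, qualified)][1] += 1
    return {key: hired / seen for key, (hired, seen) in counts.items()}


def score(model, group, qualified):
    """Score a new candidate by looking up the learned historical rate."""
    return model[(group, qualified)]


model = train(HISTORY)
print(score(model, "A", True))  # 0.75
print(score(model, "B", True))  # 0.25: same qualification, lower score
```

Real hiring models are far more complex than a lookup table. The underlying principle still holds: a model built to match past outcomes can learn whatever disparities those outcomes contain, and then apply them consistently to every new candidate.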

The illusion of objective algorithms exists because numbers look clean and rankings feel authoritative. But beneath the surface, algorithms are expressing the values embedded in their training data.

Calling this neutrality is not accurate.

It is denial.

When “Data-Driven” Becomes Opinionated

One of the most misunderstood ideas in technology is the belief that data equals reality.

It doesn’t.

Data is a record of past decisions — and past decisions are rarely fair.

When organisations claim they are “data-driven,” what they often mean is that they are repeating historical patterns with greater confidence. This is where the myth of objective algorithms becomes dangerous.

Once a decision is labelled “algorithmic,” it gains moral distance.

No one feels personally responsible anymore.

“It wasn’t us.”

“It was the system.”

That is not objectivity.

That is outsourced judgement.

The Authority Trap

Algorithms do not just make decisions.

They reshape human trust.

When an AI system produces a score, a ranking, or a recommendation, people tend to accept it, even when it contradicts lived experience or contextual knowledge. Researchers call this tendency automation bias.

Over time, a damaging hierarchy forms:

- Human judgement is labelled “emotional” or “unreliable”

- Algorithmic judgement is labelled “rational” and “scientific”

Belief in objective algorithms reinforces this hierarchy and discourages challenge.

The danger is not that AI makes mistakes.

The danger is that humans stop questioning it.

ReviewSavvyHub Analysis: The Reality of Algorithmic Logic

| Concept | The Mirage | The Reality |
|---|---|---|
| Bias | Objective algorithms remove human prejudice | AI scales and hides prejudice in code |
| Responsibility | The “system” makes the decision | Humans use systems to avoid accountability |
| Objectivity | Mathematical logic is neutral | Math expresses a specific worldview |

The Human Verdict

True objectivity has never existed — not in humans, and not in machines.

What humans have always had instead is accountability.

We can question human decisions.

We can demand explanations.

We can assign responsibility.

Objective algorithms promise neutrality, but opacity does not equal fairness. Complex models and technical language often hide value-based decisions rather than eliminate them.

The solution is not to reject AI.

It is to reframe how we trust it.

AI should be treated as:

- a lens, not a judge

- an input, not a verdict

- a tool for insight, not a source of authority

Human judgement must remain the final layer — especially where consequences matter.
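One way to picture "an input, not a verdict" inside a real workflow is a decision gate. In this gate the model's score is advisory, and any high-stakes outcome must carry a named human reviewer. The sketch below is hypothetical. The `Decision` record, the `finalise` function, the 0.5 threshold and the simple `high_stakes` flag are illustrative assumptions. They do not describe any particular product, vendor or regulation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    """Hypothetical record of one decision; field names are illustrative."""
    model_score: float                     # the algorithm's advisory output
    high_stakes: bool                      # e.g. hiring, lending, policing
    human_verdict: Optional[bool] = None   # set by an accountable reviewer
    reviewer: Optional[str] = None         # who is answerable for the outcome


def finalise(decision: Decision, threshold: float = 0.5) -> bool:
    """Treat the model score as an input; humans decide where stakes are high."""
    if decision.high_stakes:
        # Refuse to let "the system" decide alone when consequences matter.
        if decision.human_verdict is None or decision.reviewer is None:
            raise ValueError("High-stakes decision requires a named human reviewer")
        return decision.human_verdict
    # Low-stakes cases may follow the model, using an assumed threshold.
    return decision.model_score >= threshold


reviewed = Decision(model_score=0.9, high_stakes=True,
                    human_verdict=False, reviewer="reviewer-1")
print(finalise(reviewed))  # False: the reviewer overrides a high score
routine = Decision(model_score=0.7, high_stakes=False)
print(finalise(routine))   # True
```

The design choice is deliberate. The gate will not finalise a consequential decision unless a person is attached to it, so "it was the system" stops being an available answer.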

The Strategic Verdict

Real progress will not come from pretending machines are neutral.

It will come from recognising a hard truth:

Every algorithm carries a point of view.

The future of responsible technology depends on one decision:

Do we treat objective algorithms as unquestionable arbiters, or as powerful tools that still require human oversight?

Only one path leads to accountability.

ReviewSavvyHub Judgement

Objectivity in AI is a mirage.

The real danger is not biased machines; it is humans who surrender responsibility because the machine appears neutral.

Transparency, challenge, and human oversight are not optional features.

They are the price of using AI without giving up judgement.

This illusion of objectivity becomes more dangerous when algorithms actively shape how humans behave and decide. For a deeper examination of this dynamic, read our analysis on The Observer Effect in AI-Driven Workflows.

Transparency Note

This article is part of the AI Reality & Judgement Series on ReviewSavvyHub.

It is independent editorial analysis and is not affiliated with, sponsored by, or written for any AI vendor or platform.