An Industry Reality Check for Creators and Businesses

This article is part of ReviewSavvyHub’s Opinion & Insights series, examining where AI marketing claims diverge from real-world performance.

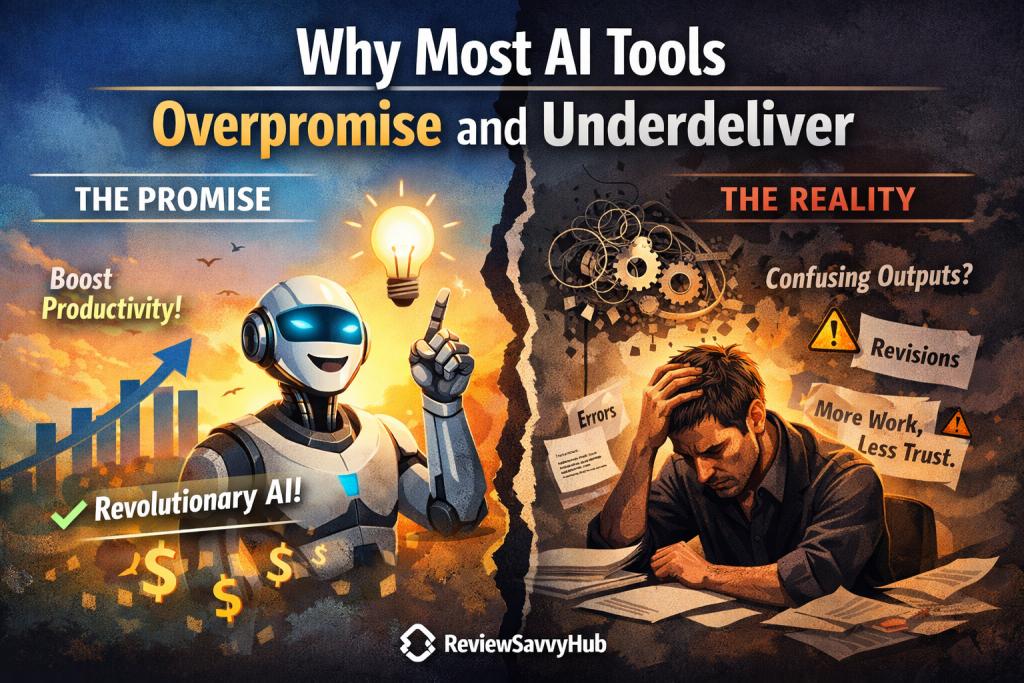

The Promise That Sold the World

That most AI tools overpromise and underdeliver is not a failure of technology. It is a reflection of how artificial intelligence is marketed, sold, and adopted.

From productivity apps to creative software, AI tools promise speed, intelligence, and automation. Yet real-world users often experience frustration, dependency, and diminishing returns once the initial excitement fades.

Overpromising isn’t an accident. It is structural.

Overpromising Isn’t a Bug — It’s the Business Model

AI companies are not exaggerating by mistake. Overpromising is baked into how modern software is sold.

Most AI tools operate in highly competitive markets where differentiation is difficult. Features overlap. Outputs look similar. What stands out is not capability, but narrative.

Tools are marketed as if they are finished products, when in reality they are evolving systems that rely heavily on user adaptation, context, and compromise.

What is sold as “automation” often becomes assisted manual work. What is pitched as “intelligence” frequently depends on carefully written prompts and repeated correction.

The gap between promise and delivery is rarely malicious. It follows from selling an evolving system as though it were a finished one.

The Hidden Cost: Cognitive Load, Not Time

One of the least discussed consequences of AI tool adoption is cognitive overhead.

While AI tools may reduce certain manual steps, they introduce new mental work:

- Learning how to prompt correctly

- Verifying outputs

- Correcting confident mistakes

- Deciding when not to trust the system

Time may be saved in some areas, but attention and mental energy are often lost.

Many users report feeling faster, yet more exhausted — productive, but less confident in the final outcome.

This is why AI tools feel impressive in demos, but inconsistent in daily use.

Why Early Wins Don’t Scale

Most users experience an initial productivity boost when adopting AI tools. The first few days feel transformative.

Then reality sets in.

As workflows become more complex, AI systems begin to show their limits. Edge cases appear. Outputs become repetitive. Automation breaks under nuance.

AI tools are excellent accelerators for simple tasks — but fragile when pushed into professional depth.

The Illusion of “Everyone Wins”

AI marketing suggests a future where everyone benefits equally.

In practice, benefits are unevenly distributed.

Power users who understand systems, context, and verification gain real advantages. Casual users often experience confusion, dependency, or disappointment. Businesses gain efficiency, while individuals quietly absorb risk.

The uncomfortable truth is that AI tools amplify existing skill gaps rather than eliminate them.

Why Reviews Rarely Tell You This

Most reviews avoid discussing these realities because:

- They rely on affiliate relationships

- They test tools in isolation, not over time

- They focus on features, not consequences

- They optimise for clicks, not trust

For a deeper look at how AI systems quietly reshape behaviour and judgement, see our analysis of the Observer Effect in AI-Driven Workflows.

What AI Tools Actually Do Well

Despite the criticism, AI tools are not failures.

Used within their limits, they perform well at:

- Speeding up repetitive tasks

- Assisting brainstorming and early drafts

- Reducing friction in early stages of work

- Supporting — not replacing — decision-making

The problem is not capability.

The problem is expectation.

The Real Question Users Should Ask

Instead of asking:

“What can this AI tool do?”

Users should ask:

“What will this AI tool quietly change about how I work, think, and decide?”

That question rarely appears on landing pages — but it determines whether AI improves or erodes long-term productivity.

Where ReviewSavvyHub Stands

At ReviewSavvyHub, we believe AI tools are neither heroes nor villains.

They are systems with consequences.

Our goal is not to hype innovation or resist progress, but to expose reality — especially the parts that do not fit neatly into marketing headlines.

Because the most dangerous AI tools are not the ones that fail loudly, but the ones that almost work.

Final Insight

Most AI tools overpromise because promises sell.

Most underdeliver because reality is complex.

The gap between the two is where informed users win — and uninformed users pay the price.

Transparency Note

This Opinion & Insights article reflects independent analysis based on real-world AI adoption patterns across creative, business, and professional workflows. ReviewSavvyHub does not accept paid influence or sponsored narratives in its editorial content.